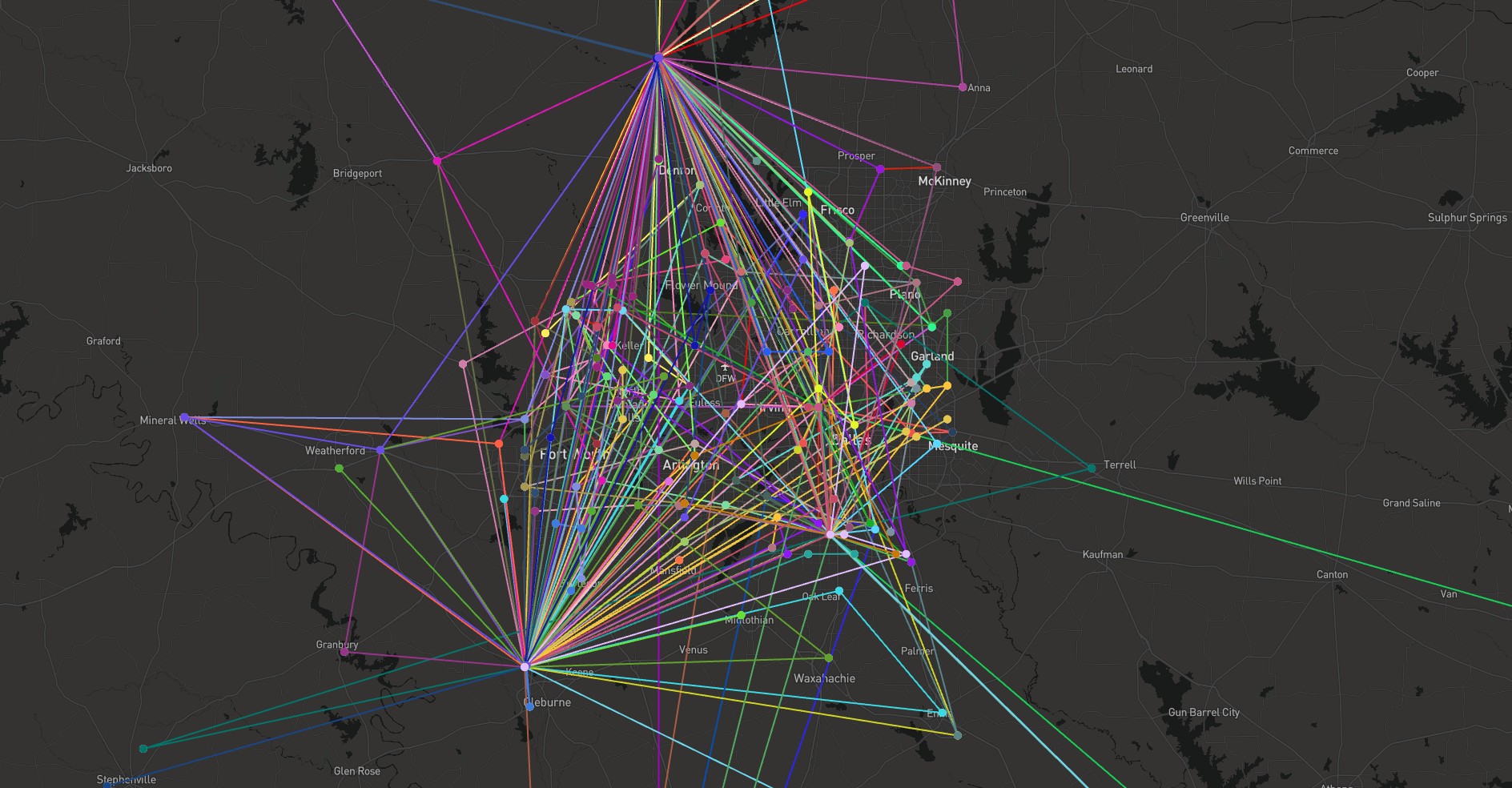

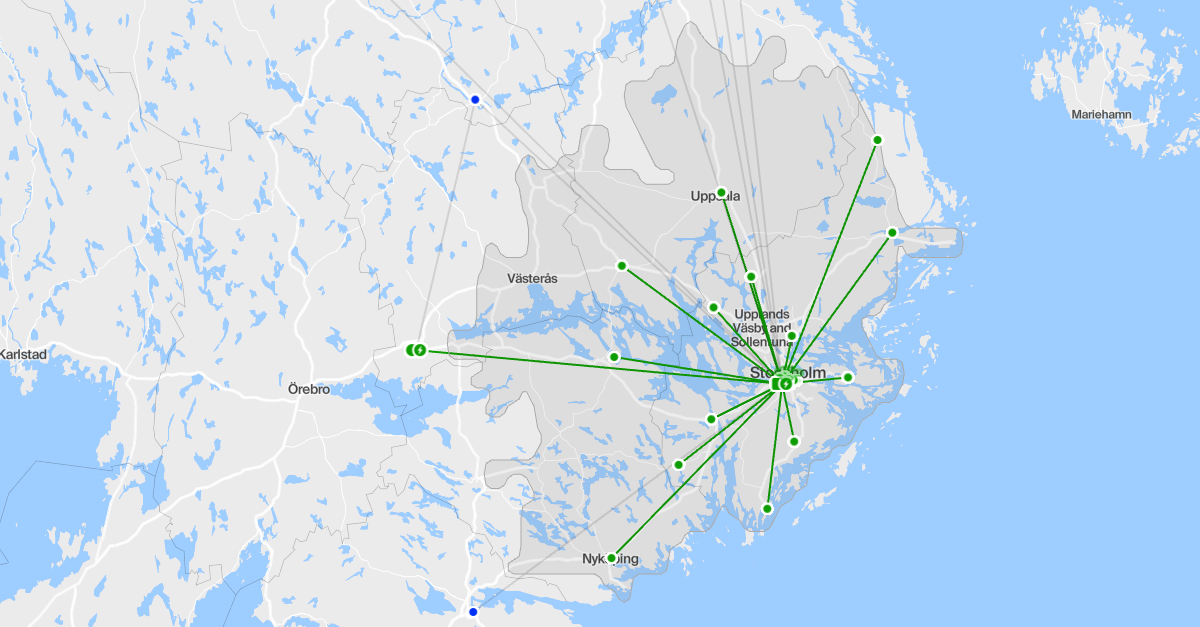

The key to reliable and cost-efficient electric freight lies in accurate range modeling, also known as energy consumption modeling. In this blog post, we outline Einride's approach to collecting and processing electric truck data to implement range models that consistently achieve over 90% accuracy.
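Range modeling and energy consumption modeling are two views of the same problem. Once a model predicts how many kWh a truck consumes per kilometre, range follows from the usable battery energy. The sketch below is a minimal illustration under stated assumptions. The `Segment` type, the consumption figures and the 10% reserve are hypothetical placeholders, and the consumption values stand in for whatever a trained model would predict. It is not Einride's model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One leg of a route (hypothetical structure for illustration)."""
    distance_km: float
    # Predicted consumption for this leg. In practice this would come from a
    # trained energy consumption model; here it is just a placeholder number.
    consumption_kwh_per_km: float


def route_energy_kwh(segments: list[Segment]) -> float:
    """Total energy the route is predicted to consume."""
    return sum(s.distance_km * s.consumption_kwh_per_km for s in segments)


def range_km(usable_energy_kwh: float, consumption_kwh_per_km: float) -> float:
    """Distance the truck can cover at a constant average consumption rate."""
    if consumption_kwh_per_km <= 0:
        raise ValueError("consumption_kwh_per_km must be positive")
    return usable_energy_kwh / consumption_kwh_per_km


def route_is_feasible(
    usable_energy_kwh: float,
    segments: list[Segment],
    reserve_fraction: float = 0.1,  # hypothetical safety margin
) -> bool:
    """True if the route fits within the battery energy minus a reserve."""
    return route_energy_kwh(segments) <= usable_energy_kwh * (1 - reserve_fraction)


if __name__ == "__main__":
    route = [Segment(100.0, 1.2), Segment(50.0, 1.5)]
    print(route_energy_kwh(route))          # predicted kWh for the whole route
    print(range_km(400.0, 1.25))            # 320.0
    print(route_is_feasible(400.0, route))  # True
```

Battery size is fixed, so the quality of every range estimate in this chain depends on the consumption prediction. That prediction is where the modeling effort goes.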

To reach international targets for limiting global warming, the transport industry needs to accelerate its transition to electric vehicles. Going electric at scale requires a mixed-brand fleet, as well as accurate range predictions for every brand in that fleet. This is challenging because different truck manufacturers use different data models. What if we could define a standard data format that all truck data could be mapped to, regardless of brand? Read on to learn how Einride is standardizing data to enable accurate and unbiased range models.
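One way to picture a brand-agnostic data format is a single target record plus one mapping function per manufacturer. Each mapper converts field names and units into the shared schema. The sketch below makes several assumptions. The `StandardSample` fields, the two brands and all of their raw field names and units are invented for illustration. They do not describe any real manufacturer's data or Einride's actual format.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

RawSample = Dict[str, Any]


@dataclass(frozen=True)
class StandardSample:
    """Hypothetical brand-agnostic record for one telemetry sample."""
    vehicle_id: str
    timestamp_s: float          # seconds since Unix epoch
    speed_kmh: float
    state_of_charge_pct: float  # 0-100
    odometer_km: float


def from_brand_a(raw: RawSample) -> StandardSample:
    # Hypothetical brand A: already reports km/h, SoC in percent, odometer in km.
    return StandardSample(
        vehicle_id=str(raw["vin"]),
        timestamp_s=float(raw["ts"]),
        speed_kmh=float(raw["speed"]),
        state_of_charge_pct=float(raw["soc"]),
        odometer_km=float(raw["odo_km"]),
    )


def from_brand_b(raw: RawSample) -> StandardSample:
    # Hypothetical brand B: milliseconds, m/s, SoC as a 0-1 fraction, metres.
    return StandardSample(
        vehicle_id=str(raw["vehicleId"]),
        timestamp_s=raw["timeMs"] / 1000.0,
        speed_kmh=raw["speedMps"] * 3.6,
        state_of_charge_pct=raw["socFraction"] * 100.0,
        odometer_km=raw["odometerM"] / 1000.0,
    )


# Registry of per-brand mappers; adding a brand means adding one function.
MAPPERS: Dict[str, Callable[[RawSample], StandardSample]] = {
    "brand_a": from_brand_a,
    "brand_b": from_brand_b,
}


def to_standard(brand: str, raw: RawSample) -> StandardSample:
    """Map a raw sample from the given brand into the shared schema."""
    try:
        mapper = MAPPERS[brand]
    except KeyError:
        raise ValueError(f"no mapping registered for brand {brand!r}") from None
    return mapper(raw)


if __name__ == "__main__":
    sample = to_standard("brand_b", {
        "vehicleId": "T2",
        "timeMs": 1_700_000_000_000,
        "speedMps": 25.0,
        "socFraction": 0.8,
        "odometerM": 123_456.0,
    })
    print(sample)
```

Because every downstream step (feature engineering, training, evaluation) reads only `StandardSample`, a range model trained on this format never sees brand-specific quirks. Supporting a new manufacturer means writing one more mapper rather than changing the model.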

The data used to train range prediction models has a significant impact on transport planning
